# Run some setup code for this notebook.

import random

import numpy as np

from cs231n.data_utils import load_CIFAR10

import matplotlib.pyplot as plt

# This is a bit of magic to make matplotlib figures appear inline in the

# notebook rather than in a new window.

%matplotlib inline

plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray'

# Some more magic so that the notebook will reload external python modules;

# see http://stackoverflow.com/questions/1907993/autoreload-of-modules-in-ipython

%load_ext autoreload

%autoreload 2

CS231N

This course is a deep dive into the details of deep learning architectures, with a focus on learning end-to-end models for visual recognition tasks, particularly image classification.

This page contains my solutions and approaches for the assignment. All source code for my solutions is available on GitHub.

Multiclass Support Vector Machine exercise

Complete and hand in this completed worksheet (including its outputs and any supporting code outside of the worksheet) with your assignment submission. For more details see the assignments page on the course website.

In this exercise you will:

- implement a fully-vectorized loss function for the SVM

- implement the fully-vectorized expression for its analytic gradient

- check your implementation using numerical gradient

- use a validation set to tune the learning rate and regularization strength

- optimize the loss function with SGD

- visualize the final learned weights

Import necessary packages

CIFAR-10 Data Loading and Preprocessing

# Load the raw CIFAR-10 data.

cifar10_dir = 'cs231n/datasets/cifar-10-batches-py'

# Cleaning up variables to prevent loading data multiple times (which may cause memory issue)

try:
    del X_train, y_train
    del X_test, y_test
    print('Clear previously loaded data.')
except NameError:
    # Nothing was loaded yet, so there is nothing to clear.
    pass

X_train, y_train, X_test, y_test = load_CIFAR10(cifar10_dir)

# As a sanity check, we print out the size of the training and test data.

print('Training data shape: ', X_train.shape)

print('Training labels shape: ', y_train.shape)

print('Test data shape: ', X_test.shape)

print('Test labels shape: ', y_test.shape)Training data shape: (50000, 32, 32, 3)

Training labels shape: (50000,)

Test data shape: (10000, 32, 32, 3)

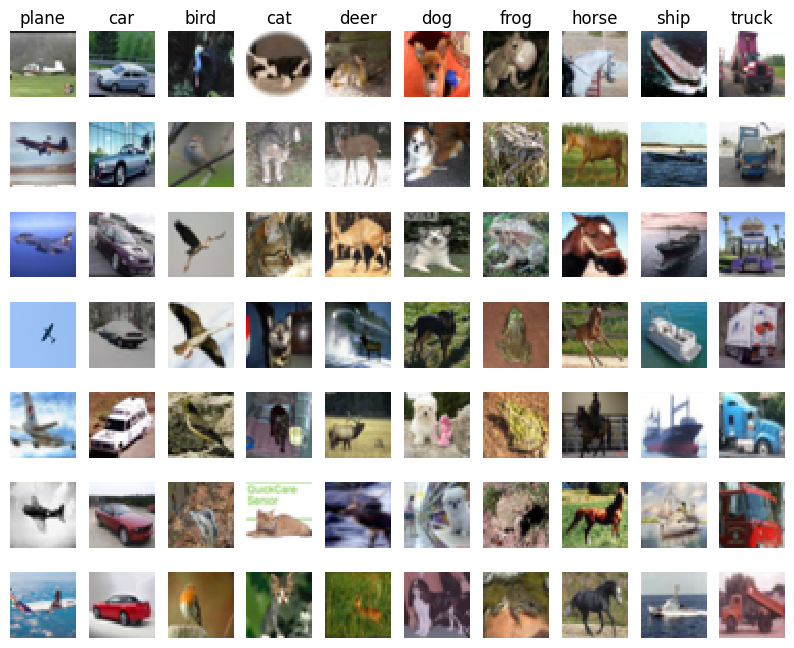

Test labels shape: (10000,)# Visualize some examples from the dataset.

# We show a few examples of training images from each class.

classes = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

num_classes = len(classes)

samples_per_class = 7

for y, cls in enumerate(classes):
    idxs = np.flatnonzero(y_train == y)
    idxs = np.random.choice(idxs, samples_per_class, replace=False)
    for i, idx in enumerate(idxs):
        plt_idx = i * num_classes + y + 1
        plt.subplot(samples_per_class, num_classes, plt_idx)
        plt.imshow(X_train[idx].astype('uint8'))
        plt.axis('off')
        if i == 0:
            plt.title(cls)
plt.show()

# Split the data into train, val, and test sets. In addition we will

# create a small development set as a subset of the training data;

# we can use this for development so our code runs faster.

num_training = 49000

num_validation = 1000

num_test = 1000

num_dev = 500

# Our validation set will be num_validation points from the original

# training set.

mask = range(num_training, num_training + num_validation)

X_val = X_train[mask]

y_val = y_train[mask]

# Our training set will be the first num_training points from the original

# training set.

mask = range(num_training)

X_train = X_train[mask]

y_train = y_train[mask]

# We will also make a development set, which is a small subset of

# the training set.

mask = np.random.choice(num_training, num_dev, replace=False)

X_dev = X_train[mask]

y_dev = y_train[mask]

# We use the first num_test points of the original test set as our

# test set.

mask = range(num_test)

X_test = X_test[mask]

y_test = y_test[mask]

print('Train data shape: ', X_train.shape)

print('Train labels shape: ', y_train.shape)

print('Validation data shape: ', X_val.shape)

print('Validation labels shape: ', y_val.shape)

print('Test data shape: ', X_test.shape)

print('Test labels shape: ', y_test.shape)

Train data shape: (49000, 32, 32, 3)
Train labels shape: (49000,)
Validation data shape: (1000, 32, 32, 3)
Validation labels shape: (1000,)
Test data shape: (1000, 32, 32, 3)
Test labels shape: (1000,)

# Preprocessing: reshape the image data into rows

X_train = np.reshape(X_train, (X_train.shape[0], -1))

X_val = np.reshape(X_val, (X_val.shape[0], -1))

X_test = np.reshape(X_test, (X_test.shape[0], -1))

X_dev = np.reshape(X_dev, (X_dev.shape[0], -1))

# As a sanity check, print out the shapes of the data

print('Training data shape: ', X_train.shape)

print('Validation data shape: ', X_val.shape)

print('Test data shape: ', X_test.shape)

print('dev data shape: ', X_dev.shape)Training data shape: (49000, 3072)

Validation data shape: (1000, 3072)

Test data shape: (1000, 3072)

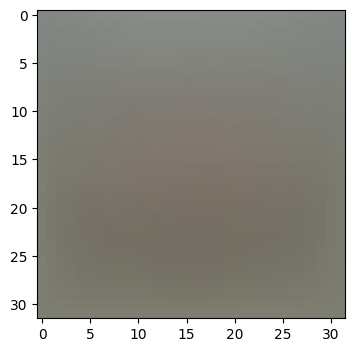

dev data shape: (500, 3072)# Preprocessing: subtract the mean image

# first: compute the image mean based on the training data

mean_image = np.mean(X_train, axis=0)

print(mean_image[:10]) # print a few of the elements

plt.figure(figsize=(4,4))

plt.imshow(mean_image.reshape((32,32,3)).astype('uint8')) # visualize the mean image

plt.show()

# second: subtract the mean image from train and test data

X_train -= mean_image

X_val -= mean_image

X_test -= mean_image

X_dev -= mean_image

# third: append the bias dimension of ones (i.e. bias trick) so that our SVM

# only has to worry about optimizing a single weight matrix W.

X_train = np.hstack([X_train, np.ones((X_train.shape[0], 1))])

X_val = np.hstack([X_val, np.ones((X_val.shape[0], 1))])

X_test = np.hstack([X_test, np.ones((X_test.shape[0], 1))])

X_dev = np.hstack([X_dev, np.ones((X_dev.shape[0], 1))])

print(X_train.shape, X_val.shape, X_test.shape, X_dev.shape)

[130.64189796 135.98173469 132.47391837 130.05569388 135.34804082
 131.75402041 130.96055102 136.14328571 132.47636735 131.48467347]
(49000, 3073) (1000, 3073) (1000, 3073) (500, 3073)
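The bias trick in the third preprocessing step can be checked on a tiny example. The sketch below is only an illustration: the sizes and the random `W` and `b` are made up, and it just confirms that appending a column of ones to `X` and a bias row to `W` gives the same scores as `X.dot(W) + b`.

```python
import numpy as np

rng = np.random.default_rng(0)
N, D, C = 4, 6, 3            # tiny illustrative sizes, not the CIFAR-10 ones
X = rng.normal(size=(N, D))
W = rng.normal(size=(D, C))
b = rng.normal(size=(C,))

# Scores with separate weights and bias.
scores_explicit = X.dot(W) + b

# Bias trick: append a column of ones to X and the bias as an extra row of W.
X_aug = np.hstack([X, np.ones((N, 1))])
W_aug = np.vstack([W, b[np.newaxis, :]])
scores_trick = X_aug.dot(W_aug)

print(X_aug.shape, W_aug.shape)
print(np.allclose(scores_explicit, scores_trick))
```

This is why the data shapes printed above grow from 3072 to 3073 columns: the last column carries the bias.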

SVM Classifier

Your code for this section will all be written inside cs231n/classifiers/linear_svm.py.

As you can see, we have prefilled the function svm_loss_naive which uses for loops to evaluate the multiclass SVM loss function.
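Before reading the loop version, it may help to see the loss for a single example. This is a minimal sketch with made-up scores and an assumed true label; it uses the same margin of 1 that appears as `+ 1` in svm_loss_naive.

```python
import numpy as np

# Hypothetical class scores for one example with 3 classes (illustrative values).
scores = np.array([3.2, 5.1, -1.7])
correct_class = 0  # assumed true label
delta = 1.0        # margin, matching the "+ 1" used in svm_loss_naive

# Hinge term for every class, then drop the correct class's own term.
margins = np.maximum(0.0, scores - scores[correct_class] + delta)
margins[correct_class] = 0.0

example_loss = margins.sum()
print(margins, example_loss)
```

Only the second class violates the margin here, so it alone contributes to the loss (about 2.9); the third class scores far enough below the correct class that its term is clipped to zero.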

svm_loss_naive

def svm_loss_naive(W, X, y, reg):
    """
    Structured SVM loss function, naive implementation (with loops).

    Inputs have dimension D, there are C classes, and we operate on minibatches
    of N examples.

    Inputs:
    - W: A numpy array of shape (D, C) containing weights.
    - X: A numpy array of shape (N, D) containing a minibatch of data.
    - y: A numpy array of shape (N,) containing training labels; y[i] = c means
      that X[i] has label c, where 0 <= c < C.
    - reg: (float) regularization strength

    Returns a tuple of:
    - loss as single float
    - gradient with respect to weights W; an array of same shape as W
    """
    dW = np.zeros(W.shape)  # initialize the gradient as zero

    # compute the loss and the gradient
    num_classes = W.shape[1]
    num_train = X.shape[0]
    loss = 0.0
    for i in range(num_train):
        scores = X[i].dot(W)
        correct_class_score = scores[y[i]]
        for j in range(num_classes):
            if j == y[i]:
                continue
            margin = scores[j] - correct_class_score + 1  # note delta = 1
            if margin > 0:
                loss += margin
                # https://miro.com/app/board/uXjVMZSN-iY=/
                # incorrect class gradient part
                dW[:, j] += X[i]
                # correct class gradient part
                dW[:, y[i]] -= X[i]

    # Right now the loss is a sum over all training examples, but we want it
    # to be an average instead so we divide by num_train.
    loss /= num_train
    dW /= num_train

    # Add regularization to the loss.
    loss += reg * np.sum(W * W)
    dW += 2 * reg * W

    #############################################################################
    # TODO:                                                                     #
    # Compute the gradient of the loss function and store it in dW.             #
    # Rather than first computing the loss and then computing the derivative,   #
    # it may be simpler to compute the derivative at the same time that the     #
    # loss is being computed. As a result you may need to modify some of the    #
    # code above to compute the gradient.                                       #
    #############################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    pass
    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    return loss, dW

# Evaluate the naive implementation of the loss we provided for you:

from cs231n.classifiers.linear_svm import svm_loss_naive

import time

# generate a random SVM weight matrix of small numbers

W = np.random.randn(3073, 10) * 0.0001

loss, grad = svm_loss_naive(W, X_dev, y_dev, 0.000005)

print('loss: %f' % (loss, ))

loss: 9.342831

The grad returned from the function above is right now all zero. Derive and implement the gradient for the SVM cost function and implement it inline inside the function svm_loss_naive. You will find it helpful to interleave your new code inside the existing function.

To check that you have correctly implemented the gradient, you can numerically estimate the gradient of the loss function and compare the numeric estimate to the gradient that you computed. We have provided code that does this for you:
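The idea behind such a check can be sketched in a few lines. The helper names below (`numeric_grad_at`, `relative_error`) are hypothetical and are not the course's `grad_check_sparse`; the sketch assumes a centered difference with step `h` and one common relative-error formula, and it uses a toy function whose gradient is known.

```python
import numpy as np

def numeric_grad_at(f, x, index, h=1e-5):
    """Centered-difference estimate of df/dx[index] (hypothetical helper)."""
    old = x[index]
    x[index] = old + h
    f_plus = f(x)
    x[index] = old - h
    f_minus = f(x)
    x[index] = old  # restore the original value
    return (f_plus - f_minus) / (2 * h)

def relative_error(a, b):
    """Scale-free comparison of two numbers (assumed formula)."""
    return abs(a - b) / max(abs(a) + abs(b), 1e-12)

# Toy smooth function with a known gradient: f(x) = sum(x**2), df/dx = 2x.
x = np.array([[1.0, -2.0], [0.5, 3.0]])
f = lambda v: np.sum(v ** 2)
analytic = 2 * x

for index in [(0, 0), (1, 1)]:
    numeric = numeric_grad_at(f, x, index)
    print(index, numeric, analytic[index], relative_error(numeric, analytic[index]))
```

For a smooth function like this one the relative error should be tiny; larger errors on a few coordinates are what the inline question below asks about.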

# Once you've implemented the gradient, recompute it with the code below

# and gradient check it with the function we provided for you

# Compute the loss and its gradient at W.

loss, grad = svm_loss_naive(W, X_dev, y_dev, 0.0)

# Numerically compute the gradient along several randomly chosen dimensions, and

# compare them with your analytically computed gradient. The numbers should match

# almost exactly along all dimensions.

from cs231n.gradient_check import grad_check_sparse

f = lambda w: svm_loss_naive(w, X_dev, y_dev, 0.0)[0]

grad_numerical = grad_check_sparse(f, W, grad)

# do the gradient check once again with regularization turned on

# you didn't forget the regularization gradient did you?

loss, grad = svm_loss_naive(W, X_dev, y_dev, 5e1)

f = lambda w: svm_loss_naive(w, X_dev, y_dev, 5e1)[0]

grad_numerical = grad_check_sparse(f, W, grad)

numerical: -14.443537 analytic: -14.443537, relative error: 1.431788e-11

numerical: -19.316876 analytic: -19.316876, relative error: 4.956304e-12

numerical: -32.995300 analytic: -32.995300, relative error: 8.013372e-12

numerical: 21.391909 analytic: 21.485799, relative error: 2.189731e-03

numerical: 6.076235 analytic: 6.076235, relative error: 3.052118e-11

numerical: -5.727065 analytic: -5.727065, relative error: 1.756571e-11

numerical: 5.312886 analytic: 5.312886, relative error: 2.420835e-11

numerical: 11.907314 analytic: 11.814809, relative error: 3.899549e-03

numerical: 12.836863 analytic: 12.836863, relative error: 2.317446e-11

numerical: 16.064514 analytic: 16.064514, relative error: 8.952822e-12

numerical: 21.761711 analytic: 21.761711, relative error: 3.322979e-12

numerical: 18.233244 analytic: 18.233244, relative error: 1.009167e-11

numerical: -9.454372 analytic: -9.454372, relative error: 3.931740e-11

numerical: -4.425528 analytic: -4.425528, relative error: 6.137033e-12

numerical: -6.026364 analytic: -6.026364, relative error: 4.930699e-11

numerical: 6.462703 analytic: 6.369999, relative error: 7.224083e-03

numerical: -1.609149 analytic: -1.609149, relative error: 1.116570e-10

numerical: 2.861964 analytic: 2.939041, relative error: 1.328687e-02

numerical: 4.483437 analytic: 4.483437, relative error: 7.167920e-11

numerical: 24.961296 analytic: 24.961296, relative error: 2.248042e-11

Inline Question 1

It is possible that once in a while a dimension in the gradcheck will not match exactly. What could such a discrepancy be caused by? Is it a reason for concern? What is a simple example in one dimension where a gradient check could fail? How would changing the margin affect the frequency of this happening? Hint: the SVM loss function is not, strictly speaking, differentiable.

\(\color{blue}{\textit Your Answer:}\) fill this in.
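As a starting point for thinking about the hint, here is a one-dimensional sketch of a function with a kink. It is not the assignment's answer: the step `h`, the evaluation point, and the subgradient convention at the kink are all assumptions chosen for illustration.

```python
def hinge(x):
    """One-dimensional hinge, max(0, x); not differentiable at x = 0."""
    return max(0.0, x)

def analytic_grad(x):
    # Subgradient convention assumed here: 1 for x > 0, else 0.
    return 1.0 if x > 0 else 0.0

h = 1e-5      # finite-difference step (assumed value)
x = 1e-6      # evaluation point that is closer to the kink than h

# The centered difference straddles the kink, so it mixes both slopes.
numeric = (hinge(x + h) - hinge(x - h)) / (2 * h)
print(numeric, analytic_grad(x))
```

Because `x - h` and `x + h` fall on opposite sides of zero, the numerical estimate lands between the two slopes (roughly 0.55 here) while the analytic value is 1. Far from the kink the two agree.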

svm_loss_vectorized

# Next implement the function svm_loss_vectorized; for now only compute the loss;

# we will implement the gradient in a moment.

tic = time.time()

loss_naive, grad_naive = svm_loss_naive(W, X_dev, y_dev, 0.000005)

toc = time.time()

print('Naive loss: %e computed in %fs' % (loss_naive, toc - tic))

from cs231n.classifiers.linear_svm import svm_loss_vectorized

tic = time.time()

loss_vectorized, _ = svm_loss_vectorized(W, X_dev, y_dev, 0.000005)

toc = time.time()

print('Vectorized loss: %e computed in %fs' % (loss_vectorized, toc - tic))

# The losses should match but your vectorized implementation should be much faster.

print('difference: %f' % (loss_naive - loss_vectorized))

Naive loss: 9.342831e+00 computed in 0.126752s
Vectorized loss: 9.342831e+00 computed in 0.012110s
difference: 0.000000

def svm_loss_vectorized(W, X, y, reg):
    """
    Structured SVM loss function, vectorized implementation.

    Inputs and outputs are the same as svm_loss_naive.
    """
    loss = 0.0
    dW = np.zeros(W.shape)  # initialize the gradient as zero

    #############################################################################
    # TODO:                                                                     #
    # Implement a vectorized version of the structured SVM loss, storing the    #
    # result in loss.                                                           #
    #############################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    num_train = X.shape[0]
    scores = X.dot(W)
    correct_class_scores = scores[np.arange(num_train), y]
    margins = np.maximum(0, scores - correct_class_scores[:, np.newaxis] + 1)
    margins[np.arange(num_train), y] = 0  # the correct class contributes no loss
    loss = np.sum(margins) / num_train
    loss += reg * np.sum(W * W)
    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    #############################################################################
    # TODO:                                                                     #
    # Implement a vectorized version of the gradient for the structured SVM     #
    # loss, storing the result in dW.                                           #
    #                                                                           #
    # Hint: Instead of computing the gradient from scratch, it may be easier    #
    # to reuse some of the intermediate values that you used to compute the     #
    # loss.                                                                     #
    #############################################################################
    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    dW = np.zeros_like(W)
    # Reuse margins as a coefficient matrix: 1 for each violated margin, and
    # minus the number of violations in the correct-class column of each row.
    margins[margins > 0] = 1
    margins[np.arange(num_train), y] -= np.sum(margins, axis=1)
    dW = (X.T).dot(margins) / num_train
    # The regularization term was already added to the loss above, so only its
    # gradient is added here.
    dW += 2 * reg * W
    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    return loss, dW
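The coefficient-matrix step in the gradient is the least obvious part of svm_loss_vectorized. The sketch below, on tiny random data with made-up sizes, compares that step against the same quantity accumulated pair by pair as in the naive loop. Regularization is left out on both sides to keep the comparison short.

```python
import numpy as np

rng = np.random.default_rng(0)
N, D, C = 5, 4, 3                      # tiny illustrative sizes
X = rng.normal(size=(N, D))
y = rng.integers(0, C, size=N)
W = rng.normal(size=(D, C))

scores = X.dot(W)
margins = np.maximum(0, scores - scores[np.arange(N), y][:, np.newaxis] + 1)
margins[np.arange(N), y] = 0

# Coefficient matrix: 1 where a margin is violated, and minus the number of
# violations in the correct-class column of each row.
coeff = (margins > 0).astype(float)
coeff[np.arange(N), y] = -coeff.sum(axis=1)
dW_vectorized = X.T.dot(coeff) / N

# Same quantity accumulated one (example, class) pair at a time.
dW_loop = np.zeros_like(W)
for i in range(N):
    for j in range(C):
        if j != y[i] and margins[i, j] > 0:
            dW_loop[:, j] += X[i]
            dW_loop[:, y[i]] -= X[i]
dW_loop /= N

print(np.allclose(dW_vectorized, dW_loop))
```

Each row of `coeff` sums to zero, which reflects that every violated margin pushes one wrong-class column up and the correct-class column down by the same input vector.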

# Complete the implementation of svm_loss_vectorized, and compute the gradient

# of the loss function in a vectorized way.

# The naive implementation and the vectorized implementation should match, but

# the vectorized version should still be much faster.

tic = time.time()

_, grad_naive = svm_loss_naive(W, X_dev, y_dev, 0.000005)

toc = time.time()

print('Naive loss and gradient: computed in %fs' % (toc - tic))

tic = time.time()

_, grad_vectorized = svm_loss_vectorized(W, X_dev, y_dev, 0.000005)

toc = time.time()

print('Vectorized loss and gradient: computed in %fs' % (toc - tic))

# The loss is a single number, so it is easy to compare the values computed

# by the two implementations. The gradient on the other hand is a matrix, so

# we use the Frobenius norm to compare them.

difference = np.linalg.norm(grad_naive - grad_vectorized, ord='fro')

print('difference: %f' % difference)

Naive loss and gradient: computed in 0.117397s
Vectorized loss and gradient: computed in 0.015500s
difference: 0.000000

Stochastic Gradient Descent

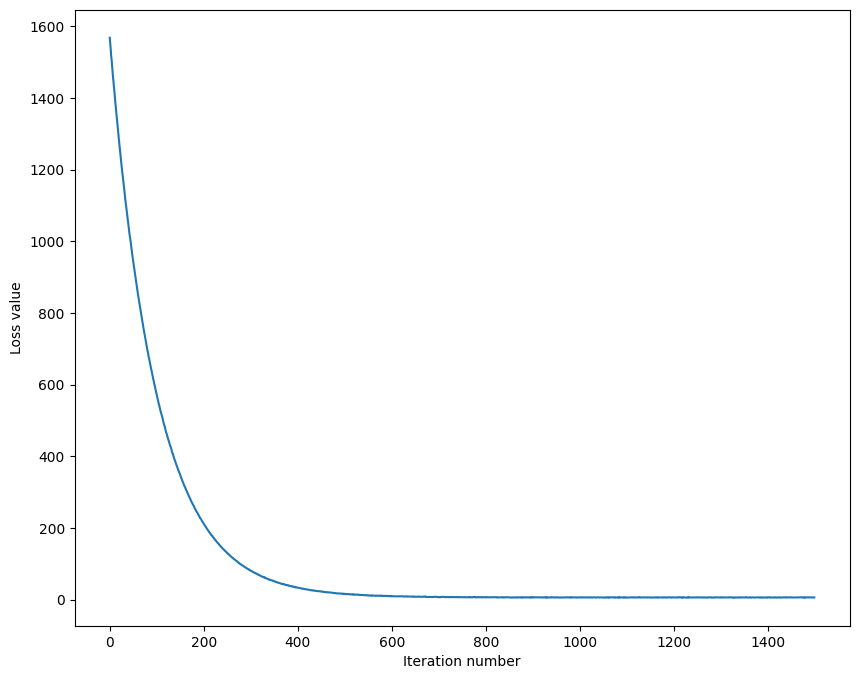

We now have vectorized and efficient expressions for the loss and the gradient, and our gradient matches the numerical gradient. We are therefore ready to do SGD to minimize the loss. Your code for this part will be written inside cs231n/classifiers/linear_classifier.py.
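A minibatch SGD loop for this loss might look roughly like the sketch below. It is not the contents of linear_classifier.py: `svm_loss`, `sgd_train`, the hyperparameter defaults, and the synthetic two-class data are all assumptions made so the example runs on its own.

```python
import numpy as np

def svm_loss(W, X, y, reg):
    """Vectorized multiclass SVM loss and gradient (hypothetical standalone copy)."""
    n = X.shape[0]
    scores = X.dot(W)
    margins = np.maximum(0, scores - scores[np.arange(n), y][:, np.newaxis] + 1)
    margins[np.arange(n), y] = 0
    loss = margins.sum() / n + reg * np.sum(W * W)
    coeff = (margins > 0).astype(float)
    coeff[np.arange(n), y] = -coeff.sum(axis=1)
    dW = X.T.dot(coeff) / n + 2 * reg * W
    return loss, dW

def sgd_train(X, y, num_classes, learning_rate=1e-2, reg=1e-3,
              num_iters=200, batch_size=32, seed=0):
    """Hypothetical minibatch SGD loop; not the course's LinearClassifier.train."""
    rng = np.random.default_rng(seed)
    W = 0.001 * rng.normal(size=(X.shape[1], num_classes))
    loss_history = []
    for _ in range(num_iters):
        # Sample a minibatch (with replacement) and evaluate loss and gradient on it.
        batch = rng.choice(X.shape[0], batch_size, replace=True)
        loss, dW = svm_loss(W, X[batch], y[batch], reg)
        loss_history.append(loss)
        W -= learning_rate * dW   # step against the gradient
    return W, loss_history

# Synthetic, well-separated two-class data (illustrative only).
rng = np.random.default_rng(1)
X_toy = np.vstack([rng.normal(-2, 1, size=(100, 2)),
                   rng.normal(2, 1, size=(100, 2))])
X_toy = np.hstack([X_toy, np.ones((200, 1))])   # bias trick
y_toy = np.array([0] * 100 + [1] * 100)

W_toy, history = sgd_train(X_toy, y_toy, num_classes=2)
accuracy = np.mean(np.argmax(X_toy.dot(W_toy), axis=1) == y_toy)
print(history[0], history[-1], accuracy)
```

Prediction is just the argmax over class scores, as in the last lines. The per-iteration loss is noisy because each value comes from a different minibatch, which is also visible in the training log below.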

# In the file linear_classifier.py, implement SGD in the function

# LinearClassifier.train() and then run it with the code below.

from cs231n.classifiers import LinearSVM

svm = LinearSVM()

tic = time.time()

loss_hist = svm.train(X_train, y_train, learning_rate=1e-7, reg=2.5e4,
                      num_iters=1500, verbose=True)
toc = time.time()
print('That took %fs' % (toc - tic))

iteration 0 / 1500: loss 1568.314234

iteration 100 / 1500: loss 571.945571

iteration 200 / 1500: loss 211.613853

iteration 300 / 1500: loss 80.568856

iteration 400 / 1500: loss 33.138999

iteration 500 / 1500: loss 15.450678

iteration 600 / 1500: loss 9.318926

iteration 700 / 1500: loss 6.927247

iteration 800 / 1500: loss 6.081467

iteration 900 / 1500: loss 6.691928

iteration 1000 / 1500: loss 5.523824

iteration 1100 / 1500: loss 5.567153

iteration 1200 / 1500: loss 5.538495

iteration 1300 / 1500: loss 5.521653

iteration 1400 / 1500: loss 5.631154

That took 12.066516s

# A useful debugging strategy is to plot the loss as a function of

# iteration number:

plt.plot(loss_hist)

plt.xlabel('Iteration number')

plt.ylabel('Loss value')

plt.show()

# Write the LinearSVM.predict function and evaluate the performance on both the

# training and validation set

y_train_pred = svm.predict(X_train)

print('training accuracy: %f' % (np.mean(y_train == y_train_pred), ))

y_val_pred = svm.predict(X_val)

print('validation accuracy: %f' % (np.mean(y_val == y_val_pred), ))training accuracy: 0.364224

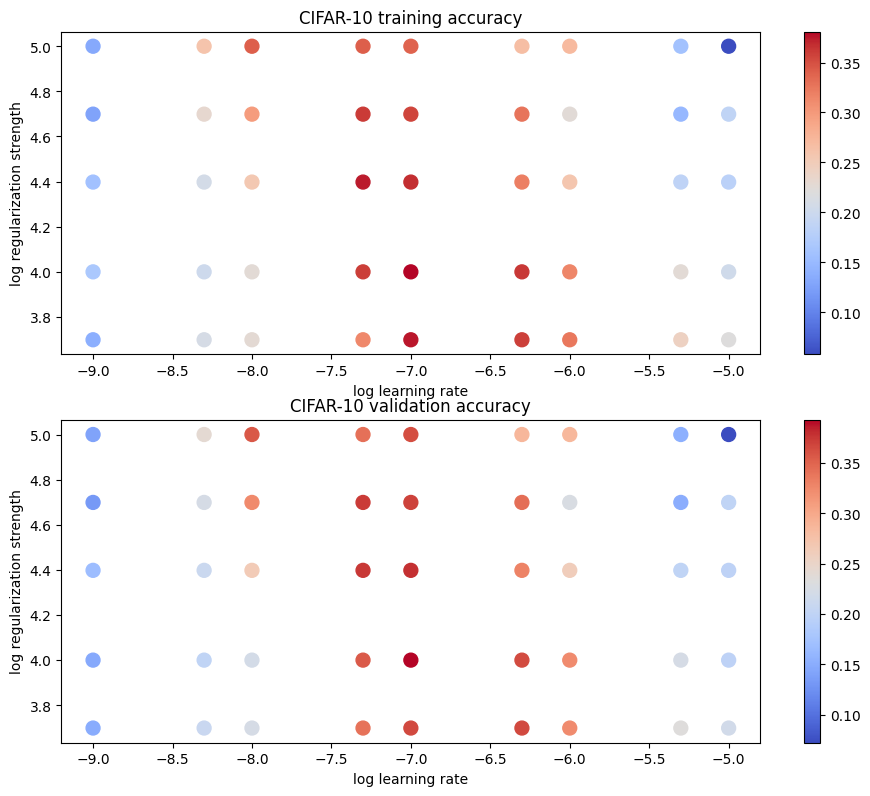

validation accuracy: 0.388000Grid Search

# Use the validation set to tune hyperparameters (regularization strength and

# learning rate). You should experiment with different ranges for the learning

# rates and regularization strengths; if you are careful you should be able to

# get a classification accuracy of about 0.39 (> 0.385) on the validation set.

# Note: you may see runtime/overflow warnings during hyper-parameter search.

# This may be caused by extreme values, and is not a bug.

# results is dictionary mapping tuples of the form

# (learning_rate, regularization_strength) to tuples of the form

# (training_accuracy, validation_accuracy). The accuracy is simply the fraction

# of data points that are correctly classified.

results = {}

best_val = -1 # The highest validation accuracy that we have seen so far.

best_svm = None # The LinearSVM object that achieved the highest validation rate.

################################################################################

# TODO: #

# Write code that chooses the best hyperparameters by tuning on the validation #

# set. For each combination of hyperparameters, train a linear SVM on the #

# training set, compute its accuracy on the training and validation sets, and #

# store these numbers in the results dictionary. In addition, store the best #

# validation accuracy in best_val and the LinearSVM object that achieves this #

# accuracy in best_svm. #

# #

# Hint: You should use a small value for num_iters as you develop your #

# validation code so that the SVMs don't take much time to train; once you are #

# confident that your validation code works, you should rerun the validation #

# code with a larger value for num_iters. #

################################################################################

# Provided as a reference. You may or may not want to change these hyperparameters

learning_rates = [1e-9, 5e-9, 1e-8, 5e-8, 1e-7, 5e-7, 1e-6, 5e-6 ,1e-5]

regularization_strengths = [5e3, 1e4, 2.5e4, 5e4, 1e5]

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

import itertools

for lr, reg in itertools.product(learning_rates, regularization_strengths):
    svm = LinearSVM()
    loss_hist = svm.train(X_train, y_train, learning_rate=lr, reg=reg,
                          num_iters=1500, verbose=False)
    y_train_pred = svm.predict(X_train)
    train_accuracy = np.mean(y_train == y_train_pred)
    y_val_pred = svm.predict(X_val)
    val_accuracy = np.mean(y_val == y_val_pred)
    results[(lr, reg)] = train_accuracy, val_accuracy
    if val_accuracy > best_val:
        best_val = val_accuracy
        best_svm = svm

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

# Print out results.

for lr, reg in sorted(results):
    train_accuracy, val_accuracy = results[(lr, reg)]
    print('lr %e reg %e train accuracy: %f val accuracy: %f' % (
        lr, reg, train_accuracy, val_accuracy))

print('best validation accuracy achieved during cross-validation: %f' % best_val)

lr 1.000000e-09 reg 5.000000e+03 train accuracy: 0.137959 val accuracy: 0.149000

lr 1.000000e-09 reg 1.000000e+04 train accuracy: 0.166857 val accuracy: 0.146000

lr 1.000000e-09 reg 2.500000e+04 train accuracy: 0.158327 val accuracy: 0.167000

lr 1.000000e-09 reg 5.000000e+04 train accuracy: 0.127061 val accuracy: 0.131000

lr 1.000000e-09 reg 1.000000e+05 train accuracy: 0.133531 val accuracy: 0.140000

lr 5.000000e-09 reg 5.000000e+03 train accuracy: 0.209980 val accuracy: 0.209000

lr 5.000000e-09 reg 1.000000e+04 train accuracy: 0.201082 val accuracy: 0.201000

lr 5.000000e-09 reg 2.500000e+04 train accuracy: 0.207796 val accuracy: 0.210000

lr 5.000000e-09 reg 5.000000e+04 train accuracy: 0.231612 val accuracy: 0.223000

lr 5.000000e-09 reg 1.000000e+05 train accuracy: 0.260510 val accuracy: 0.242000

lr 1.000000e-08 reg 5.000000e+03 train accuracy: 0.227000 val accuracy: 0.223000

lr 1.000000e-08 reg 1.000000e+04 train accuracy: 0.226571 val accuracy: 0.221000

lr 1.000000e-08 reg 2.500000e+04 train accuracy: 0.255327 val accuracy: 0.265000

lr 1.000000e-08 reg 5.000000e+04 train accuracy: 0.298510 val accuracy: 0.325000

lr 1.000000e-08 reg 1.000000e+05 train accuracy: 0.341224 val accuracy: 0.357000

lr 5.000000e-08 reg 5.000000e+03 train accuracy: 0.314061 val accuracy: 0.341000

lr 5.000000e-08 reg 1.000000e+04 train accuracy: 0.360714 val accuracy: 0.356000

lr 5.000000e-08 reg 2.500000e+04 train accuracy: 0.373837 val accuracy: 0.375000

lr 5.000000e-08 reg 5.000000e+04 train accuracy: 0.360857 val accuracy: 0.374000

lr 5.000000e-08 reg 1.000000e+05 train accuracy: 0.341000 val accuracy: 0.342000

lr 1.000000e-07 reg 5.000000e+03 train accuracy: 0.375531 val accuracy: 0.366000

lr 1.000000e-07 reg 1.000000e+04 train accuracy: 0.380980 val accuracy: 0.393000

lr 1.000000e-07 reg 2.500000e+04 train accuracy: 0.367633 val accuracy: 0.378000

lr 1.000000e-07 reg 5.000000e+04 train accuracy: 0.356204 val accuracy: 0.370000

lr 1.000000e-07 reg 1.000000e+05 train accuracy: 0.338306 val accuracy: 0.363000

lr 5.000000e-07 reg 5.000000e+03 train accuracy: 0.358959 val accuracy: 0.366000

lr 5.000000e-07 reg 1.000000e+04 train accuracy: 0.362184 val accuracy: 0.365000

lr 5.000000e-07 reg 2.500000e+04 train accuracy: 0.320184 val accuracy: 0.330000

lr 5.000000e-07 reg 5.000000e+04 train accuracy: 0.327184 val accuracy: 0.344000

lr 5.000000e-07 reg 1.000000e+05 train accuracy: 0.266796 val accuracy: 0.287000

lr 1.000000e-06 reg 5.000000e+03 train accuracy: 0.325061 val accuracy: 0.323000

lr 1.000000e-06 reg 1.000000e+04 train accuracy: 0.314939 val accuracy: 0.324000

lr 1.000000e-06 reg 2.500000e+04 train accuracy: 0.257429 val accuracy: 0.261000

lr 1.000000e-06 reg 5.000000e+04 train accuracy: 0.224551 val accuracy: 0.227000

lr 1.000000e-06 reg 1.000000e+05 train accuracy: 0.270347 val accuracy: 0.286000

lr 5.000000e-06 reg 5.000000e+03 train accuracy: 0.242776 val accuracy: 0.235000

lr 5.000000e-06 reg 1.000000e+04 train accuracy: 0.226367 val accuracy: 0.223000

lr 5.000000e-06 reg 2.500000e+04 train accuracy: 0.185796 val accuracy: 0.201000

lr 5.000000e-06 reg 5.000000e+04 train accuracy: 0.148327 val accuracy: 0.150000

lr 5.000000e-06 reg 1.000000e+05 train accuracy: 0.159327 val accuracy: 0.153000

lr 1.000000e-05 reg 5.000000e+03 train accuracy: 0.220286 val accuracy: 0.218000

lr 1.000000e-05 reg 1.000000e+04 train accuracy: 0.204143 val accuracy: 0.198000

lr 1.000000e-05 reg 2.500000e+04 train accuracy: 0.182755 val accuracy: 0.198000

lr 1.000000e-05 reg 5.000000e+04 train accuracy: 0.187327 val accuracy: 0.200000

lr 1.000000e-05 reg 1.000000e+05 train accuracy: 0.057837 val accuracy: 0.072000

best validation accuracy achieved during cross-validation: 0.393000

# Visualize the cross-validation results
import math

x_scatter = [math.log10(x[0]) for x in results]

y_scatter = [math.log10(x[1]) for x in results]

# plot training accuracy

marker_size = 100

colors = [results[x][0] for x in results]

plt.subplot(2, 1, 1)

plt.tight_layout(pad=3)

plt.scatter(x_scatter, y_scatter, marker_size, c=colors, cmap=plt.cm.coolwarm)

plt.colorbar()

plt.xlabel('log learning rate')

plt.ylabel('log regularization strength')

plt.title('CIFAR-10 training accuracy')

# plot validation accuracy

colors = [results[x][1] for x in results] # default size of markers is 20

plt.subplot(2, 1, 2)

plt.scatter(x_scatter, y_scatter, marker_size, c=colors, cmap=plt.cm.coolwarm)

plt.colorbar()

plt.xlabel('log learning rate')

plt.ylabel('log regularization strength')

plt.title('CIFAR-10 validation accuracy')

plt.show()
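The printed table is long, so it can be handy to pull the winning pair out of the dictionary directly. The sketch below assumes the `results` layout described earlier, with `(learning_rate, regularization_strength)` keys and `(training_accuracy, validation_accuracy)` values. To stay self-contained it uses a small hypothetical dictionary, `results_subset`, holding four rows copied from the output above.

```python
# A few (learning_rate, reg) -> (train_accuracy, val_accuracy) entries copied
# from the printed results above.
results_subset = {
    (5e-8, 2.5e4): (0.373837, 0.375000),
    (1e-7, 1e4): (0.380980, 0.393000),
    (1e-7, 2.5e4): (0.367633, 0.378000),
    (5e-7, 5e3): (0.358959, 0.366000),
}

# Pick the key whose validation accuracy (second tuple element) is largest.
best_lr, best_reg = max(results_subset, key=lambda k: results_subset[k][1])
print(best_lr, best_reg, results_subset[(best_lr, best_reg)][1])
```

On these rows the selected pair is a learning rate of 1e-7 with regularization strength 1e4, which matches the best validation accuracy of 0.393 reported above.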

Training Best Model

# Evaluate the best svm on test set

y_test_pred = best_svm.predict(X_test)

test_accuracy = np.mean(y_test == y_test_pred)

print('linear SVM on raw pixels final test set accuracy: %f' % test_accuracy)

linear SVM on raw pixels final test set accuracy: 0.384000

Visualize Learned Weights

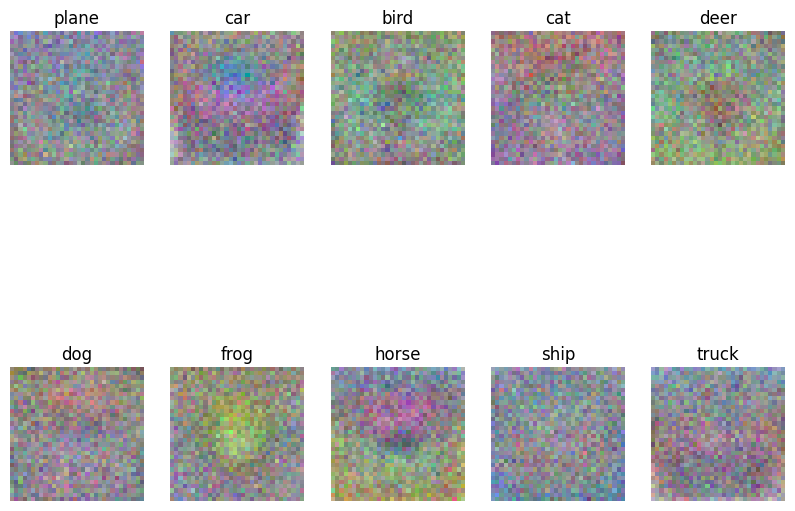

# Visualize the learned weights for each class.

# Depending on your choice of learning rate and regularization strength, these may

# or may not be nice to look at.

w = best_svm.W[:-1,:] # strip out the bias

w = w.reshape(32, 32, 3, 10)

w_min, w_max = np.min(w), np.max(w)

classes = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

for i in range(10):
    plt.subplot(2, 5, i + 1)

    # Rescale the weights to be between 0 and 255
    wimg = 255.0 * (w[:, :, :, i].squeeze() - w_min) / (w_max - w_min)
    plt.imshow(wimg.astype('uint8'))
    plt.axis('off')
    plt.title(classes[i])

Inline question 2

Describe what your visualized SVM weights look like, and offer a brief explanation for why they look the way they do.

\(\color{blue}{\textit Your Answer:}\) fill this in